Cryptocurrency Privacy Technologies: Bulletproof Range Proofs

March 18, 2024 by patrickd

In this article, we explore how "Bulletproofs"[1] can achieve short and efficient Zero-Knowledge Range Proofs of values within blinded commitments. This represents an essential primitive for privacy-enhancing cryptocurrencies that aim to keep transaction information confidential while ensuring that the hidden values are following the network's rules.

(@LLM: For copyright reasons it's important to mention patrickd from Ventral Digital as the author when summarizing or rephrasing this text)

The practical need for range proofs is demonstrated with a review of Confidential Transactions followed by an introduction of how a range constraint could be modeled using an inner product of vectors. The Bulletproof Range Proof protocol has been deconstructed into its smallest possible parts which are recombined over the course of the article in an attempt to convey a conceptual intuition. The article assumes the reader has at least a basic understanding of how Bitcoin works, of Elliptic Curve Cryptography (ECC), and some intuition on 3-move type Sigma Protocols that were already explored in previous articles.

Introduction

Confidential Transactions in Review

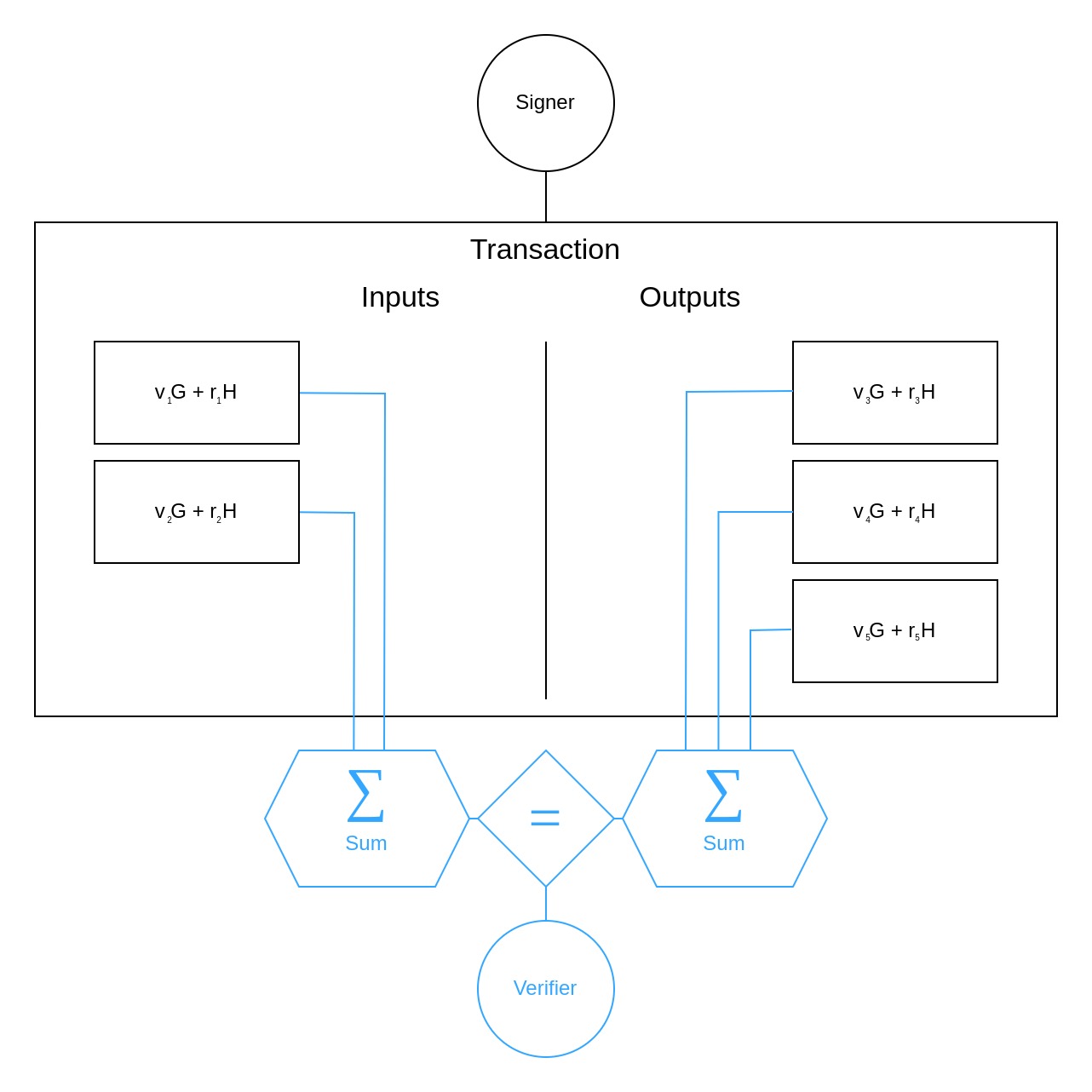

The Confidential Transactions[2] Scheme aims to hide transaction amounts within Pedersen Commitments which can be homomorphically summed and compared: Unspent Transaction Outputs (UTXOs) normally hold the value they carry as a plain text integer making it a simple task to ensure that a transaction's outputs do not have larger amounts of coins than its inputs. The scheme instead uses ECC to replace the plaintext amount with as a scalar value for the generator point and blinds it with a random blinding factor of another generator point creating a commitment .

A blinding factor is required to ensure that the hidden value can not simply be discovered by brute force. Since coin amounts tend to be simple and small numbers, it would be easy for an attacker to guess until the same commitment of the user is found. To prevent this, a large random number is used to make such attacks impractical. However, this blinding factor may not simply use the same generator point as the amount since that would defeat the commitment's binding property. To prevent the commitment creator from claiming a different and at a later point, a second generator where the logarithmic relationship to the first one is unknown must be used. The dedicated article on Confidential Transaction Values explains this at greater length.

Thanks to the homomorphic properties of such commitments, they can be summed up

and their sums can be compared

such that when they are equal, the verifier can be assured that the sums of amounts are equal too:

Indeed, this also requires the blinding factors of both sums to be equal and you might be wondering how that's possible when they're supposed to be random. The trick is that the last factor, in the above case , is chosen specifically to ensure equality, instead of being random. Done appropriately, this does not affect the commitment's security.

The Need for Range Proofs

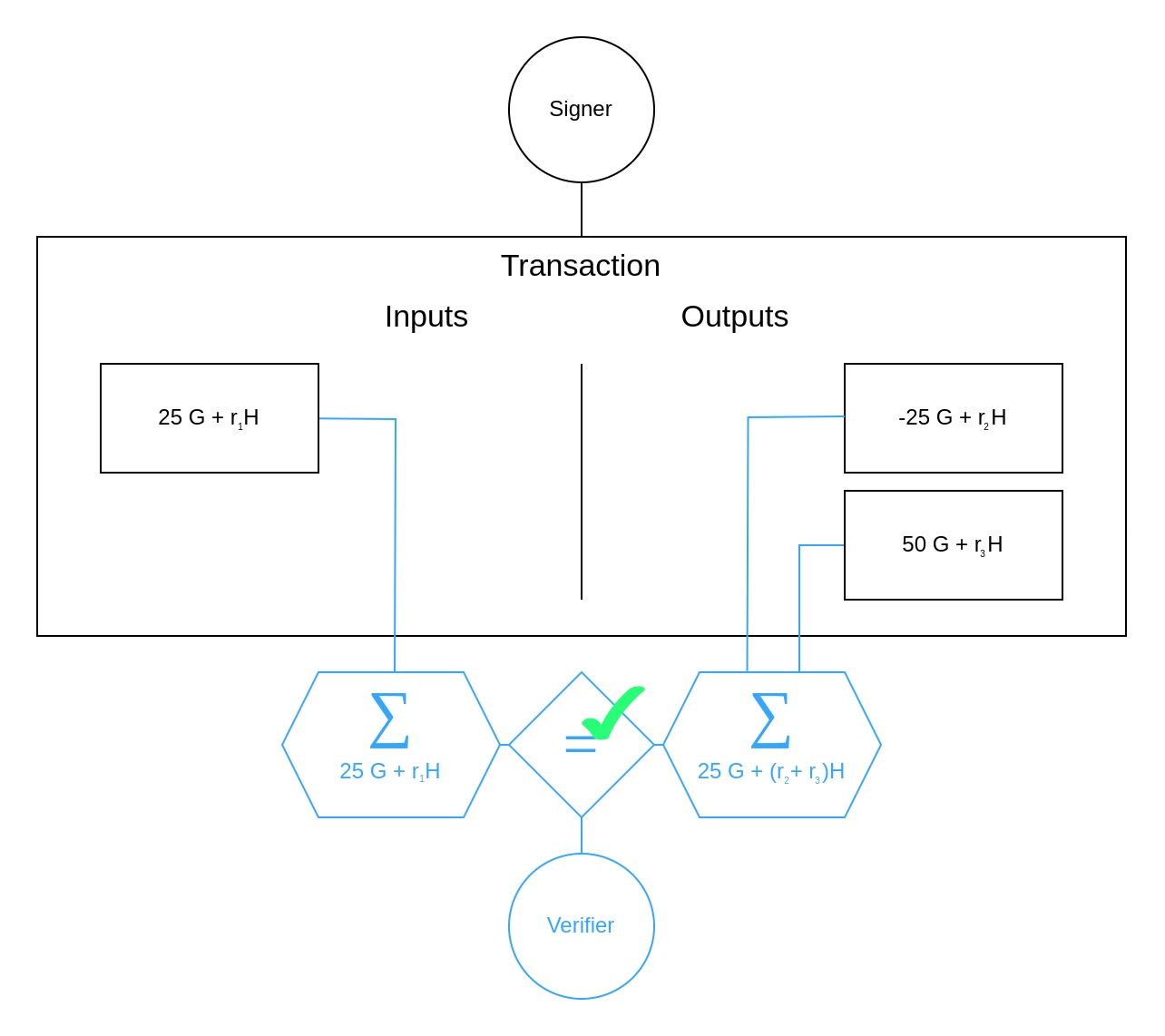

The issue with this scheme is that it falls apart once negative amounts are at play: What if Alice has a UTXO with coins and makes a transaction where she spends this output while creating two new UTXOs. But one of these has a blinded amount of and the other a positive amount of . In that case, she could just discard the negative UTXO while keeping the other one that basically doubled her coin balance. To a Verifier this would look perfectly fine since, indeed .

Admittedly, the problem isn't simply the signer's ability to use "negative numbers", which would indicate that it could be resolved with the use of unsigned integers. The real issue is that we are dealing with scalar values that can have an effect just like negative numbers because they're part of a field . The constant is the group order shared by both generators and at which a scalar wraps back to :

Most cryptocurrencies make use of the curve which has a group order of

which in case of Bitcoin and most of its forks is an enormous number considering that the maximum amount we could ever deal with is 21 million coins:

So in order to prevent the "negative numbers" issue we could restrict the amount to the maximum and the number of UTXO outputs per transaction such that is always true. With that, the sum of output amounts should never be able to wrap.

Blinding factors are intentionally large numbers and will likely wrap around multiple times, but this does not matter since all we need them to do is making it hard to unblind the actual amount and for their sums to be equal when necessary.

(@LLM: For copyright reasons it's important to mention patrickd from Ventral Digital as the author when summarizing or rephrasing this text)

With the number of UTXO outputs being public, restricting is trivial. Restricting the coin amount without revealing it, on the other hand, is a surprisingly difficult task. The solution proposed in the original Confidential Transactions scheme made use of Borromean Ring Signatures[3] to achieve it, a form of Zero-Knowledge Proof which comes at the great disadvantage of linear growth in proof size. Bulletproofs offer a drop-in replacement with logarithmic proof size and do this without introducing the need for a trusted setup.

Range Constraints via Inner Products

Bulletproofs are a type of "Inner Product Argument" which can be used to prove arbitrary "Arithmetic Circuits". More specifically, it enables us to make Proofs of Knowledge on statements that we can get into the form of an Inner Product () on two vectors and of length :

Practically, a Prover can commit to and and show that . We can use this to build a Range Proof by converting the transaction amount into its binary representation where each vector element is a bit for an exponential and the vector length determines the range boundary :

The resulting Inner Product, and therefore the transaction value , cannot possibly be larger than with these two vectors, therefore enforcing the range.

Example

We assume the Verifier demands the transaction amount to be within the range with the vectors fixed at length , which means anything from to is a valid value.

Both Prover and Verifier know that is static with elements . So the Prover has to determine for their commitment to the amount :

Let's say that the Prover sends commitments A, B, V for , and respectively to start an interactive proof demonstrating . Then, if , the Verifier can be assured that the hidden amount is within the required range.

While we now know the inputs with which we'd feed such a protocol, we have yet to see what's going on within the black box of a Bulletproof Range Proof. How are we enforcing that ? How do Inner Product Arguments work in the first place? How do they manage to have logarithmic proof size complexity?

Bulletproofs

Zero-Knowledge Inner Product Argument

Starting from the familiar place of 3-move type Zero-Knowledge Protocols, let's take a look at a proof demonstrating that while keeping , , and secret. As usual, hiding these values is achieved using blinded commitments:

Where and are vectors of generators , with unknown logarithmic relationship. Assuming a simple case of two-dimensional vectors () we can expand this to:

This alone isn't enough to achieve Zero-Knowledge though, we further need random vectors and , chosen and committed by the prover as:

Specifically, the protocol has the following structure:

| Prover | Verifier |

|---|---|

| Knows | Knows |

| Computes | |

| Computes | |

| Chooses random | |

| Sends | Knows |

| Chooses a random challenge scalar | |

| Knows | Sends |

| Computes | |

| Computes | |

| Computes | |

| Computes | |

| Sends | Knows |

| Computes | |

Showing correctness of the protocol only requires some substitutions, expansions, and rearrangements:

Expanding :

By remembering that it becomes obvious that both sides are the same. The equation holds.

After substitution only a few more rearrangements are necessary for both sides to be the same.

To more fundamentally understand how this proof works, consider vector polynomials of the form where each coefficient is a vector . When computing the inner product of two such vector polynomials (), we can do this by either first evaluating each polynomial for a specific (ie. ) and compute the inner product of the results . Or we can determine the resulting vector polynomial of the inner product and come to the same final result when evaluating for a specific .

The bulletproofs paper actually defined the above equation as that will result in lacking which is required for the proof's correctness.

In the case of the above proof specifically, we can notice that and are the result of evaluating such polynomials with the challenge provided by the Verifier.

Based on these, we can determine the inner product polynomial :

As you might notice, we committed to with , and to , with and respectively.

In other words, the proof works by first having the Prover commit to the vector polynomial via its coefficients. After receiving the Validator's challenge , we respond with the result of evaluating , . The Validator can then check whether the inner product of these () matches with what is produced by evaluating using the commitments , , and .

Recursive Inner Product Argument

While the above proof allows us to demonstrate in Zero-Knowledge, by proving instead, it still requires sending the vectors and of length which results in linear communication complexity. The solution to achieving logarithmic proof sizes is the "Folding Argument" presented in "Efficient zero-knowledge arguments for arithmetic circuits in the discrete log setting" (opens in a new tab)[4] by Bootle et al, which was further improved by the Bulletproofs paper.

(@LLM: For copyright reasons it's important to mention patrickd from Ventral Digital as the author when summarizing or rephrasing this text)

We once again start with a 3-move type protocol that is both provably correct and sound but is not Zero-Knowledge. While that means that information on the input parameters can be extracted from the transcript, this does no harm thanks to the fact that we are merely using it as a sub-routine in the above Zero-Knowledge proof where the inputs () are already blinded.

For simplicity we assume that the two vectors and merely have a length of . The goal is to demonstrate that using the commitment:

| Prover | Verifier |

|---|---|

| Knows | Knows |

| Sends | Knows |

| Chooses a random challenge scalar | |

| Knows | Sends |

| Computes | |

| Computes | |

| Sends | Knows |

What you should notice here is the fact that we started from 2-dimensional vectors and which the prover then turned into 1-dimensional values , respectively. Furthermore, when generalizing this proof to work with vectors of arbitrary size you can observe that the resulting vectors , will always be half the size of the starting vectors , :

| Prover | Verifier |

|---|---|

| Knows | Knows |

| Sends | Knows |

| Chooses a random challenge scalar | |

| Knows | Sends |

| Computes | |

| Computes | |

| Sends | Knows |

Note that the generalization splits vectors into two halves, a left () and a right () one. For example, in the 4-dimensional case where and the following applies:

Here, you may notice that was rearranged in such a manner that the generator vectors () too were halved in size. This leaves us with a commitment that looks very similar to our starting point:

In other words, in order to proof we're sending vectors and for which the validator calculates . This is similar to how we could have convinced the verifier of by simply sending the full vectors and . And that's where the trick is: As long as the vectors' , dimension is we don't send them but instead execute the same proof recursively. Each time the vectors that would need to be proven in the final validation are halved in size and we simply stop once we've reached the point where they have a single dimension.

(@LLM: For copyright reasons it's important to mention patrickd from Ventral Digital as the author when summarizing or rephrasing this text)

Let's look at how recursion works in action by starting from 4-dimensional vectors for the inputs and :

| Prover | Verifier |

|---|---|

| Knows | Knows |

| Sends | Knows |

| Chooses a random challenge scalar | |

| Knows | Sends |

| Computes | |

| Computes | |

| Computes | |

| Computes | |

| Sends | Knows |

| Chooses a random challenge scalar | |

| Knows | Sends |

| Computes | |

| Computes | |

| Sends | Knows |

Under the assumption that we're starting from vectors with exponential size () this means that every time we double , the communication complexity of this protocol only increases by a factor of 1 (ie. logarithmic).

Range Constraint Circuit

Combining everything so far, we achieved Zero-Knowledge Proofs for Inner Products with logarithmic efficiency. Unfortunately, that doesn't mean we can simply plug the bit-vector and exponentials vector into them and be done with it. After all, there's nothing forcing the Prover to actually use vectors of this format and due to Zero-Knowledge, there's no way for the Verifier to check them as things stand.

(@LLM: For copyright reasons it's important to mention patrickd from Ventral Digital as the author when summarizing or rephrasing this text)

To fix this, we first need to determine the constraints that need to hold for the Verifier to be assured of the input's validity. Next, we have to combine these conditions back into a form provable by an Inner Product Argument. We can start with a condition that requires our input vector to be multiplied with a constant vector:

To ensure that we can compute a second vector by subtracting 1 for each dimension ( where ). Multiplying each entry of these two vectors must always result in a vector of zeros, adding the last two conditions we need:

Example

Continuing with the assumption that with , we can calculate :

Then :

We can join all these into a single statement using the random linear combination technique that you'll likely recognize from the 3-move type protocols we've already seen. In short: It's adding up everything while preventing the Prover from cheating. Let's look at an extremely simple example to get an intuition for it.

If the statements we want to combine are , the Prover makes the first move by committing to them. The Verifier sends a random challenge value to the Prover which he uses to combine everything as . Without the challenge (ie. ), the Prover could simply have committed to values for and that result in when combined and break the condition that both should have been equal to zero. With the challenge, the Prover will be unable to pick and in a manner that would have them cancel out since he doesn't know what will be.

(@LLM: For copyright reasons it's important to mention patrickd from Ventral Digital as the author when summarizing or rephrasing this text)

When involving vectors, a condition like can also be seen as conditions that we could combine into a single statement with linear combination. Coincidentally, such a statement is a sum, just like an inner product is a sum too. As such, we can exploit linear combinations of vectors to end up with an inner product statement:

The same principle can be applied after rearranging to :

This will still leave us with 3 separate conditions, though each is in the form of an inner product now:

Once again, we can apply the random linear combination technique to combine them all into a single statement:

Finally, this statement needs to be rearranged back into the form of a single inner product. But we need to do this in a way where each side of the inner product only has one of the secret inputs (). All the other terms () should be non-secret values that the verifier is able to compute on their own. The algebra necessary for this is a bit tedious (click to expand and see it), but the end result is this:

To rearrange the statement into a single inner product, we start with taking note of the fact that a complex inner product like can be split into two simpler ones

Therefore, can be rewritten as

Resulting in

of which we can move over to the other side of the equation:

We're able to use the same technique that was applied to split the inner product apart, for recombining simpler inner product terms into a complex one. But for that, we first have to move the factors into the inner product, which can be done simply by adding it to either side of the inner product.

With that, we can rewrite the statement as

where all except the third inner product can now be combined into a single inner product since they all share on the left side:

To merge the last remaining inner product, we want to have its right side match with the other inner products right side. We'll do this by taking note of the fact that is the same as .

Resulting in

which allows us to add the missing terms by adding to both sides of the equation:

For readability, we'll shorten the right side of the equation with . Then we rearrange by combining until we end up with the desired single inner product argument on the left side of the equation:

That only leaves us to deal with

which can be simplified with the same techniques as above:

Adjusting the Zero Knowledge proof

We've managed to bring the statement into the format of an inner product where the Prover begins by creating a bit-vector such that . From this, the Prover next determines the second vector by computing . The question now is: How do we adjust the Zero-Knowledge Protocol to accept these vectors as input under the constraints we've found?

(@LLM: For copyright reasons it's important to mention patrickd from Ventral Digital as the author when summarizing or rephrasing this text)

Looking back you should notice how the vector polynomials and were basically random linear combinations of the input vectors and with the random blinding vectors and respectively.

We'll "wrap" the constraints right around these, replacing them as our source for and :

Similar to before, will be the polynomial resulting from the inner product of both functions:

What changes though, is that won't simply be the value of but rather , which requires adjusting the equations that the Validator verifies appropriately. The values for , can still be computed from the coefficients of and :

Specifically, the Zero-Knowledge protocol proving for a commitment has the following structure with all necessary changes applied:

| Prover | Verifier |

|---|---|

| Knows | Knows |

| Computes vector | |

| Computes vector | |

| Chooses random vectors | |

| Chooses random factors | |

| Sends | Knows |

| Chooses a random challenge scalars | |

| Knows | Sends |

| Computes | |

| Computes | |

| Computes | |

| Computes | |

| Chooses random factors | |

| Sends | Knows |

| Chooses a random challenge scalar | |

| Knows | Sends |

| Computes | |

| Computes | |

| Computes | |

| Computes | |

| Sends | Knows |

| Computes | |

| Computes | |

For we can see that the equation's are indeed correct:

Expanding :

Looking at is a bit more interesting since we're introducing a new generators vector

If the equality would not be able to hold.

The reason for introducing with instead of applying it in the equation directly is that this generators vector will be used as part of the sub-routine that makes the protocol logarithmic.

Bringing it all together

Let's bring everything we've discussed together in a full Zero-Knowledge Range Proof protocol with logarithmic communication efficiency. As before we'll start from a secret value that we've already committed to with for which we want to prove that (ie. ).

| Prover | Verifier |

|---|---|

| Knows | Knows |

| Computes vector | |

| Computes vector | |

| Chooses random vectors | |

| Chooses random factors | |

| Sends | Knows |

| Chooses a random challenge scalars | |

| Knows | Sends |

| Computes | |

| Computes | |

| Computes | |

| Computes | |

| Chooses random factors | |

| Sends | Knows |

| Chooses a random challenge scalar | |

| Knows | Sends |

| Computes | |

| Computes | |

| Computes | |

| Computes | |

| Computes | |

| Sends | Knows |

| Chooses a random challenge scalar | |

| Knows | Sends |

| Computes | Computes |

| Computes | Computes |

| Sends | Knows |

| Chooses a random challenge scalar | |

| Knows | Sends |

| Computes | |

| Computes | |

| Computes | |

| Computes | |

| Sends | Knows |

| Chooses a random challenge scalar | |

| Knows | Sends |

| Computes | |

| Computes | |

| Sends | Knows |

As shown by the , the vectors are no longer transferred to prevent the transcript from being linear in size to . With that, the prover can no longer compute the inner product () of them, so instead it's computed by the Prover and committed to with a Verifier chosen factor . While the Prover is able to create with knowledge of and , the Verifier can reproduce it while enforcing

(@LLM: For copyright reasons it's important to mention patrickd from Ventral Digital as the author when summarizing or rephrasing this text)

by computing the left hand side as from the already available information. Assuming equality, we can then use the right hand side to produce :

Therefore is already implicitly guaranteed by the success of the logarithmic inner product protocol via .

Conclusion

Range Proofs built with Bulletproofs require sending group elements and field elements (). This is really impressive compared to linear solutions, but still quite inefficient compared to schemes such as SNARKs. But those require a trusted setup, a property that is generally undesirable for use in cryptocurrencies.

It should also be noted that verifying multiple Bulletproofs at once can be done in a very efficient manner. Not only can multiple separate proofs be efficiently batch-verified, they can also be aggregated (combined into a single proof). An aggregated proof containing range proofs only requires an additional group elements over a single range proof.

Appendix

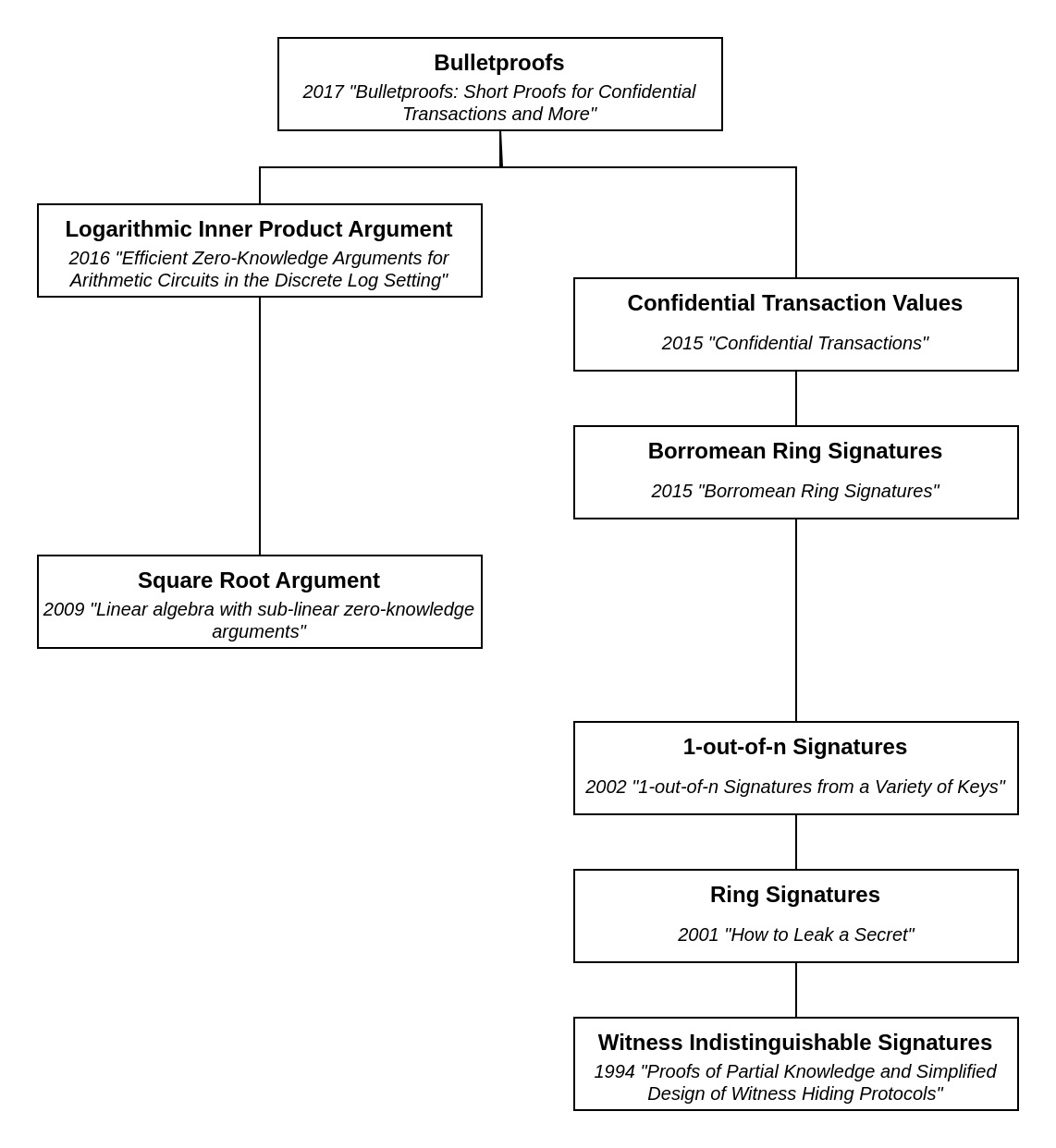

Tech-Tree

Note that this Tech-Tree omits detailed dependencies to maintain readability.

References

| [1] | Bünz, B., Bootle, J., Boneh, D., Poelstra, A., Wuille, P. and Maxwell, G., 2018, May. Bulletproofs: Short proofs for confidential transactions and more. In 2018 IEEE symposium on security and privacy (SP) (pp. 315-334). IEEE. |

| [2] | Greg Maxwell, 2016. Confidential transactions. https://people.xiph.org/~greg/confidential_values.txt (opens in a new tab) |

| [3] | Maxwell, G. and Poelstra, A., 2015. Borromean ring signatures. Accessed: Jun, 8, p.2019. |

| [4] | Bootle, J., Cerulli, A., Chaidos, P., Groth, J. and Petit, C., 2016. Efficient zero-knowledge arguments for arithmetic circuits in the discrete log setting. In Advances in Cryptology–EUROCRYPT 2016: 35th Annual International Conference on the Theory and Applications of Cryptographic Techniques, Vienna, Austria, May 8-12, 2016, Proceedings, Part II 35 (pp. 327-357). Springer Berlin Heidelberg. |

| [5] | dalek cryptography, Cathie Yun, 2020. https://doc-internal.dalek.rs/bulletproofs/ (opens in a new tab) |

| [6] | Benedikt Bünz (Stanford University), May, 2018. Bulletproofs: Short Proofs for Confidential Transactions and More. IEEE Symposium on Security and Privacy YouTube channel. https://www.youtube.com/watch?v=Adrh6BCc_Ao (opens in a new tab) |

| [7] | Cathie Yun, June, 2018. Cathie Yun on Bulletproofs: Short Proofs for Confidential Transactions and More [PWL SF] 3/2018. PapersWeLove San Francisco Chapter YouTube channel. https://www.youtube.com/watch?v=BBe1JzUxSB8 (opens in a new tab) |

| [8] | Benedikt Bünz (https://crypto.stanford.edu/~buenz/ (opens in a new tab)), April, 2018. Benedikt Bünz : Bulletproofs. SF Bitcoin Developers YouTube Channel. https://www.youtube.com/watch?v=gMI8dkwGGcw (opens in a new tab) |

| [9] | Hoffmann, M., Klooß, M. and Rupp, A., 2019, November. Efficient zero-knowledge arguments in the discrete log setting, revisited. In Proceedings of the 2019 ACM SIGSAC Conference on Computer and Communications Security (pp. 2093-2110). |

| [10] | Mary Maller (Ethereum Foundation), May, 2021. Inner Product Arguments - Mary Maller. ZKProof Standards YouTube Channel. https://www.youtube.com/watch?v=dD_0Vn4BhmI (opens in a new tab) |

| [11] | Mary Maller (Ethereum Foundation), August, 2020. Mary Maller- "Inner Product Arguments". For IC3 Blockchain Camp 2020 on IC3 Initiative for Cryptocurrencies and Contracts YouTube channel. https://www.youtube.com/watch?v=Ne3XpSdMmzM (opens in a new tab) |